Tuning Relevance

This tutorial assumes you have finished the Setting Up Your First Case tutorial. It is split into two sections: Creating Judgments and then Tuning Relevance.

Creating Judgments

In order to do relevancy tuning, you must have some judgements available to you to evaluate your search quality against.

While you can judge documents right in a Case, in general it's better to use a Book to manage the judgements and let Subject Matter Experts or Expert Users do the judging work!

Making Binary Judgements

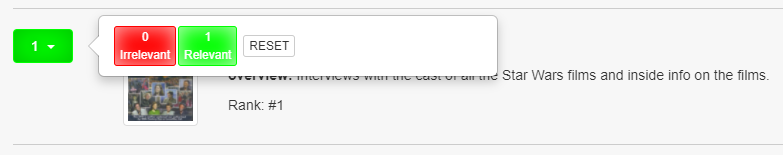

Let's start with the star wars query by clicking it. The list of movies matching the star wars query will be shown. To the left of each movie search result is a gray box. We use this box to provide a relevance judgment for each movie. Click the Select Scorer link and click P@10. This allows us to make a binary judgment on each search result and label each as either relevant (1) or not relevant (0). Now, for each search result, look at the fields returned (the ones we selected when we created the case) and click the gray box. If you think this search result is relevant to the query, click Relevant. Otherwise, click Irrelevant.

Congratulations! You have just provided a human judgment to a search result!

Graded Judgements for Nuance

As you probably know, search relevancy is often not a binary decision. Sometimes one result is more relevant than another result. We want to see the movie Star Wars returned above Plastic Galaxy: The Story of Star Wars Toys. Both movies are relevant, but the former is more relevant.

We'll now change the Scorer we are using to one that supports Graded Judgements. Click the Select Scorer link and select the DCG@10 option and click the Select Scorer button. With this scorer selected for our Case we can judge our search results on a scale of 0 (poor) to 3 (perfect).

Don't worry about what the scorers actually mean - that is out of scope for us as a human rater. We will leave the scorers to the search relevance engineers!
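If you are on the engineering side and curious what a graded scorer does with those 0 to 3 judgments, here is a minimal sketch. It assumes the common textbook formulation of DCG, where each grade is divided by log2(rank + 1). Quepid's own DCG@10 scorer may use a different gain or discount, so treat this as an illustration only. The function name dcg_at_k is ours.

```python
import math

def dcg_at_k(grades, k=10):
    """Discounted cumulative gain over the top-k grades, best-ranked result first.

    Assumption: gain is the raw grade and the discount is log2(rank + 1).
    """
    return sum(
        grade / math.log2(rank + 1)
        for rank, grade in enumerate(grades[:k], start=1)
    )

# The same two judgments score higher when the better document is ranked first.
better_order = dcg_at_k([3, 2])
worse_order = dcg_at_k([2, 3])
```

The point for a rater is simply that position matters: a "perfect" result contributes more to the score the higher it appears in the list.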

Look at the title and overview of the first search result. Is this movie relevant for the query star wars? Click the gray box by the movie and make your selection. Is it a poor match, a perfect match, or somewhere in between? There is likely no correct answer - two people may give different answers and that's ok. What's important is that you take your time and provide your judgments consistently across queries and search results.

Who is keeping score?

As you assign judgments, you will likely notice the score at the top left of the page changes. It's important to remember you are not trying to get a high score, a low score, or any particular score. For our efforts as a rater we are not at all concerned about a score. The search relevance engineers will know what to do with the score! Just remember, it is not your goal or responsibility to have any influence on the score.

The score is not a reflection of your efforts.

Tuning Relevance

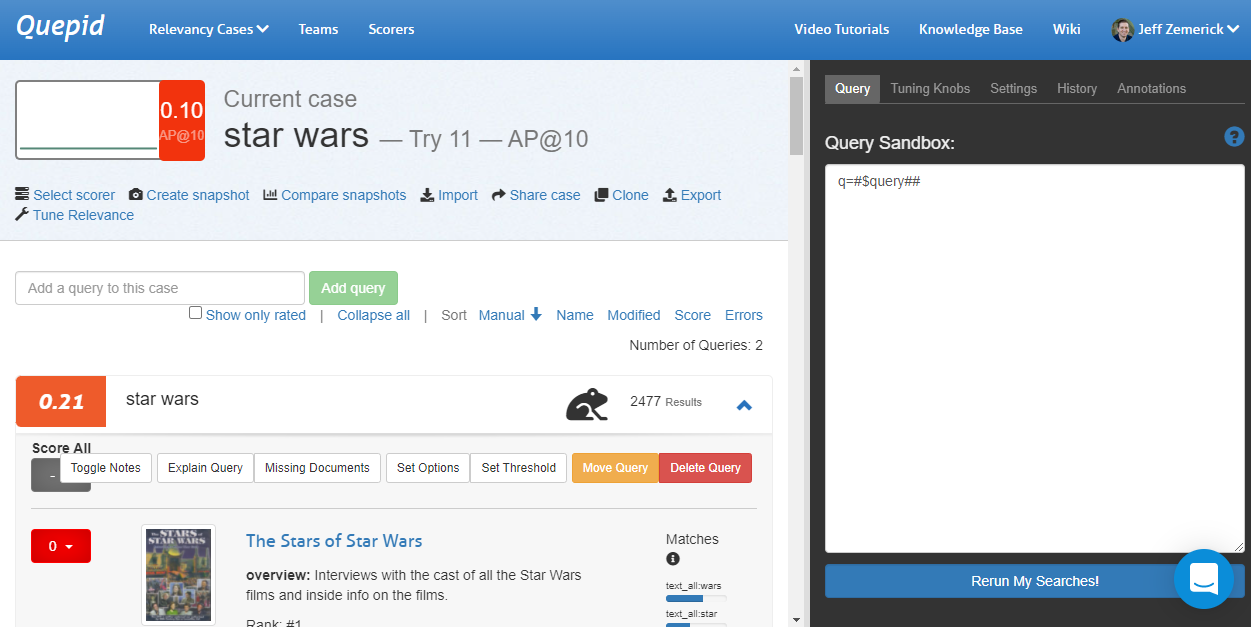

Now let's do some search tuning! Our star wars query returns movies that are at least somewhat related to the Star Wars movies, but we probably want to see the actual Star Wars movies first instead of movies such as 'The Stars of Star Wars' and 'Plastic Galaxy: The Story of Star Wars Toys.' So let's do some tuning to see if we can improve our search results. We are using the AP@10 scorer. With our human judgments, our score for the current query is 0.21. Click the Tune Relevance link to open the side window and the Query tab.

Let's use our search engineering knowledge to improve our query's score! Improving search is a heavily experimental and iterative process. We may try some things that work and some things that don't work, and that's ok! We can learn from what doesn't work in our iterations. To start, let's change our search to use the phrase fields (pf) parameter to boost results where the search query (star wars) appears in the title. We'll also use edismax. Now, our query looks like:

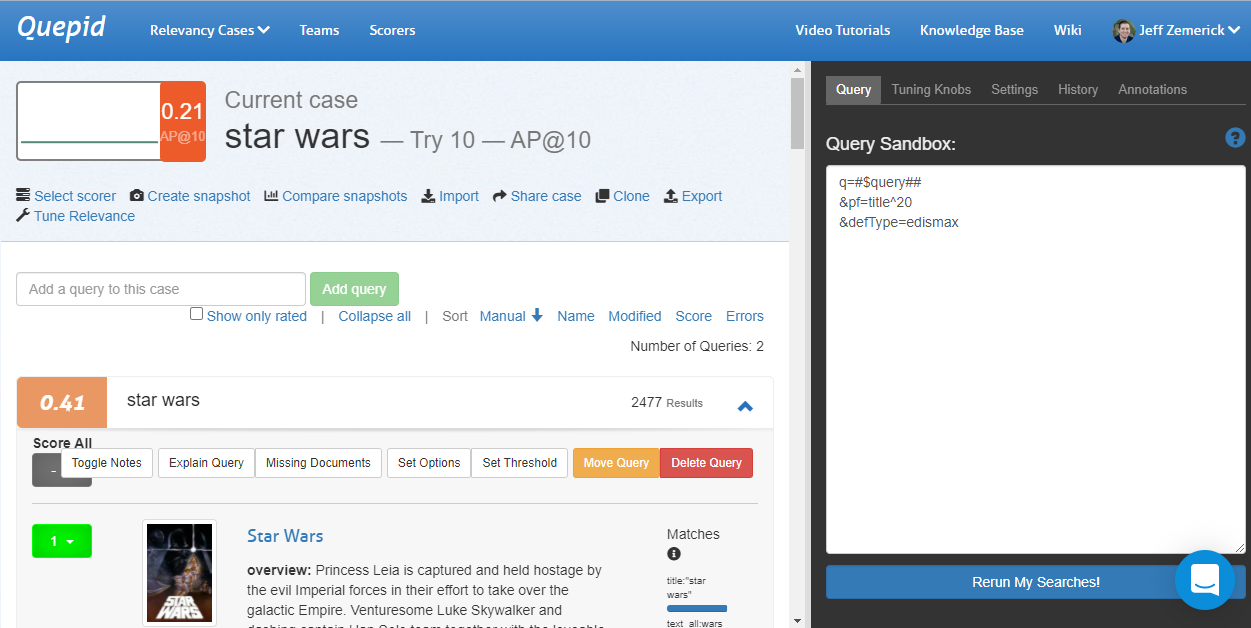

q=#$query##&pf=title^20&defType=edismax
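To make the placeholder idea concrete, here is a small sketch of how a template like the one above could be turned into search parameters for a given user query. It illustrates the substitution only and is not Quepid's actual implementation. The helper name fill_template is hypothetical.

```python
from urllib.parse import parse_qsl

PLACEHOLDER = "#$query##"

def fill_template(template, user_query):
    """Split the template into parameters, then substitute the user query.

    Splitting first means characters such as '&' inside the user query
    cannot be mistaken for parameter separators.
    """
    params = dict(parse_qsl(template, keep_blank_values=True))
    return {name: value.replace(PLACEHOLDER, user_query) for name, value in params.items()}

params = fill_template("q=#$query##&pf=title^20&defType=edismax", "star wars")
```

Here params holds q set to star wars, pf set to title^20, and defType set to edismax, which is the same set of parameters we would expect to see sent to the search engine.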

Click the Rerun My Searches button to run our new query. Let's look at our search results now. The first result is the movie Star Wars, so that's great and an obvious improvement! The AP@10 score has increased to 0.41. The next movies are 'Star Wars Spoofs' and 'Battle Star Wars.' Are those movies what our searchers want to see? Probably not. The other Star Wars movies would be better results.
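Why does moving Star Wars to the top raise the score? The sketch below computes average precision over the top 10 results from binary judgments. It assumes the sum of precisions is divided by the number of relevant results found in the top 10. Other definitions divide by the total number of relevant documents, and Quepid's AP@10 scorer may differ, so the numbers here will not match the scores shown in the tutorial.

```python
def average_precision_at_k(relevant_flags, k=10):
    """Average of precision@rank taken at each rank that holds a relevant result.

    Assumption: the denominator is the count of relevant results in the top k.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(relevant_flags[:k], start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0

# One relevant document, first at rank 2 and then moved up to rank 1.
before = average_precision_at_k([False, True])
after = average_precision_at_k([True, False])
```

With a single relevant document, the score is 0.5 when it sits at rank 2 and 1.0 when it sits at rank 1, which is the same effect we see when a boost pushes the best result to the top.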

Let's iterate on our query and see if we can improve some more. What should we try? Maybe there are other fields in our schema that we could be using. The TMDB database has a genres field that contains a list of genres for each movie. We can try restricting our search results to just action movies using the genres field. This will help remove some of the irrelevant movies from the search results.

q=#$query## AND genres:"Action"&defType=edismax

This provides a huge gain in our score although we might have a few frogs indicating there are new results that have not been judged. Will we always know the user is searching for action movies? Maybe, maybe not - it depends on your use-case. This is just an example of how we can use Quepid to iterate on our queries.

What do the frog icons mean?

The little frog icon tells you that some of the documents returned for a query are unrated, and how many. The more unrated documents there are, the less confident you can be as a Relevancy Engineer that your metrics are accurate.
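The idea behind the frog count can be sketched in a few lines. This assumes we have the ordered list of returned document ids and a dictionary of the ratings collected so far. The names and the depth of 10 are illustrative choices, not Quepid internals.

```python
def count_unrated(result_ids, ratings, depth=10):
    """Number of top-`depth` results that do not have a rating yet."""
    return sum(1 for doc_id in result_ids[:depth] if doc_id not in ratings)

# Hypothetical example: three results returned, only the first has been judged.
unrated = count_unrated(["doc-a", "doc-b", "doc-c"], {"doc-a": 3})
```

A count of 2 here would mean two of the returned documents still need a judgment before the score for this query can be trusted.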

Understanding How the Query was Processed

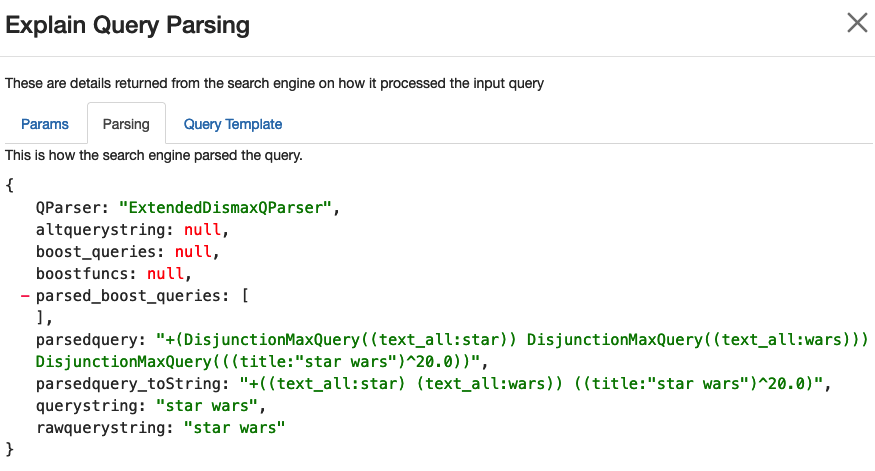

It can be helpful to understand how the original user query was processed. Clicking the Explain Query button will open a window that has some panes to help you understand what happened to the query. The first pane is the Params listing. It shows exactly what parameters were sent to the search engine for the query, and can help you understand what else is influencing the query.

The second pane, Explain Query Parsing, shows how the original user query was parsed into the final query. Especially for multi-word queries, you can see how they are expanded into a much longer query, which can help explain why some seemingly random documents might get matched!

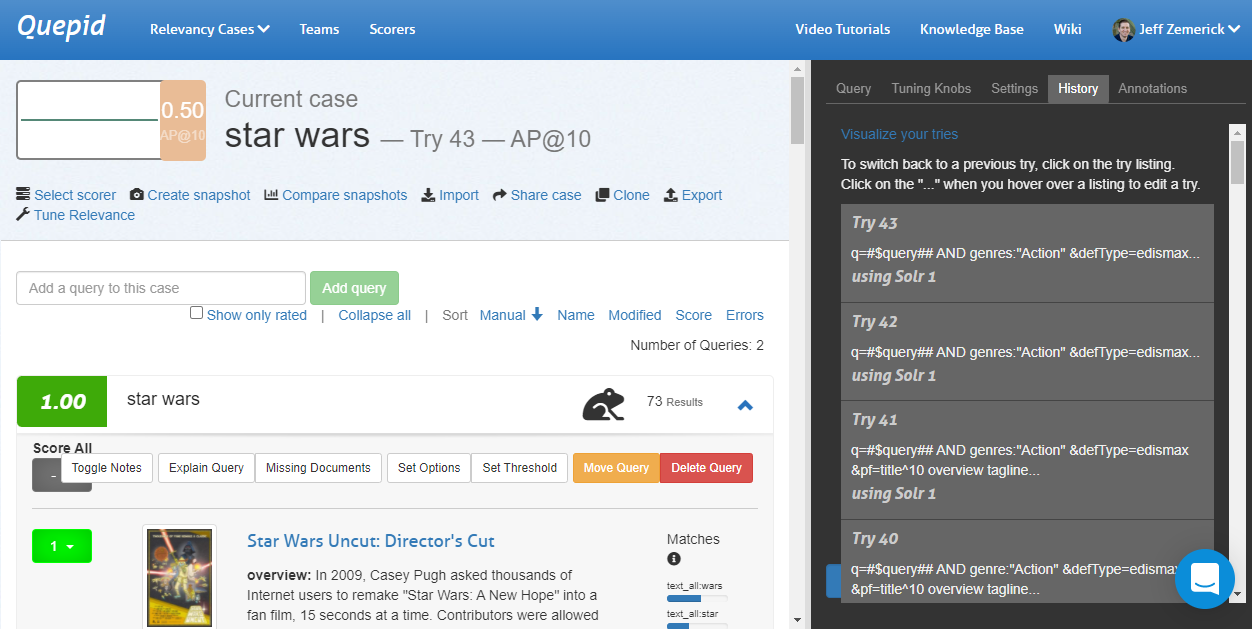

Viewing the Query Tuning History

As we iterate, it can be helpful to see what we have previously tried. The History tab will show the history of our previous queries.

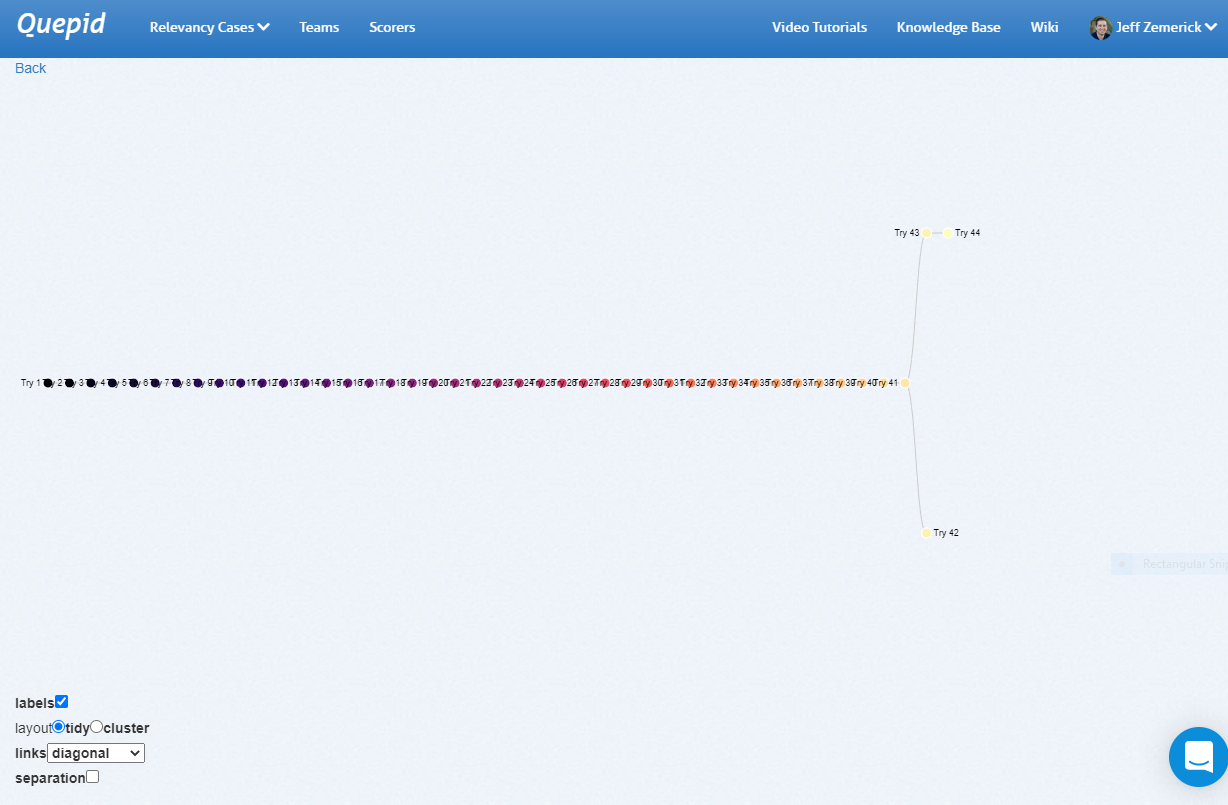

Since every Try is related to its parent Try, you can visualize them in a tree format.

Under the History tab at the top you will see a Visualize your tries link. Clicking this link opens a new window that shows a visual representation of your query history. Hover over each node to see the query that was run. Clicking on a node in the visualization will re-run that query.

The history link visualization provides a powerful way to examine your previous queries. As part of Quepid's goal to reduce experiment iteration time, the history link visualization can help you reduce the iteration time by showing a comprehensive look at your past iterations and helping you to not inadvertently duplicate any previous iterations.

Here is an example of a straight line set of Tries that eventually branched:

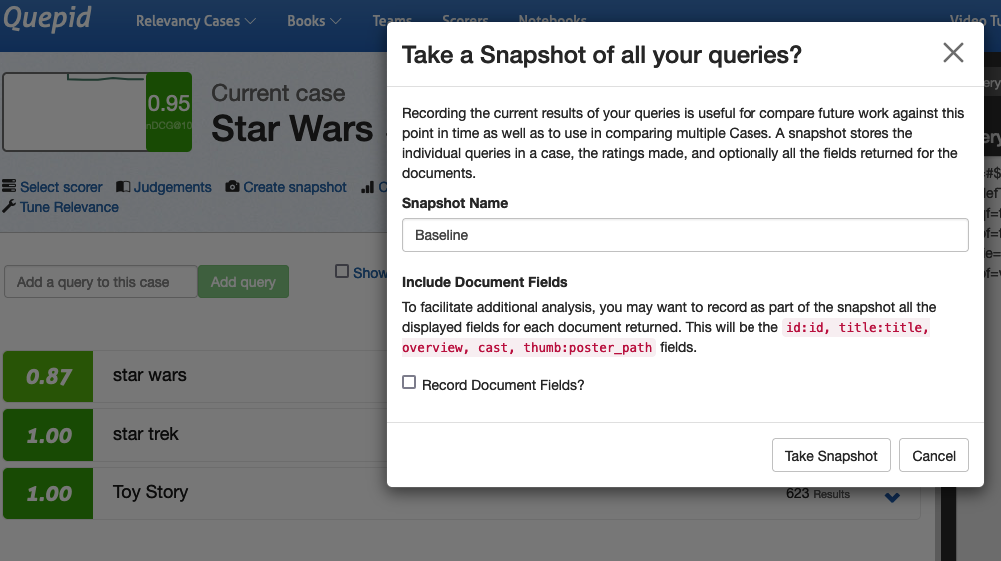
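The parent relationship is all that is needed to draw such a tree. As a rough sketch, assume each Try is identified by its number and records the number of its parent Try (None for the first one). The history below is made up to mirror a straight line of Tries that then branches, and the function names are hypothetical.

```python
from collections import defaultdict

def build_tree(parents):
    """Map each parent try number to the list of its child try numbers."""
    children = defaultdict(list)
    for try_number, parent in sorted(parents.items()):
        children[parent].append(try_number)
    return children

def render(children, node=None, depth=0):
    """Return one indented line per try, children listed under their parent."""
    lines = []
    for child in children.get(node, []):
        lines.append("  " * depth + f"Try {child}")
        lines.extend(render(children, child, depth + 1))
    return lines

# Hypothetical history: tries 1 to 3 in a straight line, then two branches off try 3.
history = {1: None, 2: 1, 3: 2, 4: 3, 5: 3}
tree_lines = render(build_tree(history))
print("\n".join(tree_lines))
```

In the printed outline, Try 4 and Try 5 appear at the same indentation under Try 3, which is the branch point.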

Measuring your Overall Progress

Snapshots let you capture your Case at a point in time so that you can compare against it later and see how things are changing. Is your search relevancy improving or declining? Snapshots provide you with that knowledge.

After changes to the query have been made and you are at a point where you would like to record your progress, click the Create snapshot link. Give the Snapshot a name like Baseline.

Having snapshots available as you work will allow you to compare your progress over time.

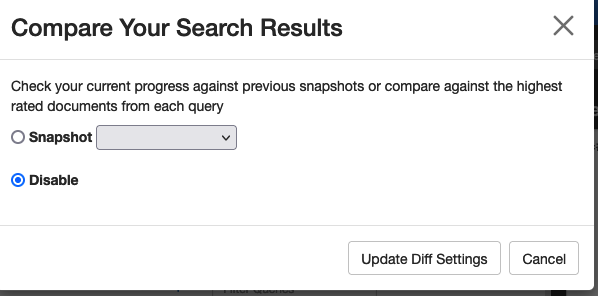

To compare snapshots, click the Compare snapshots link.

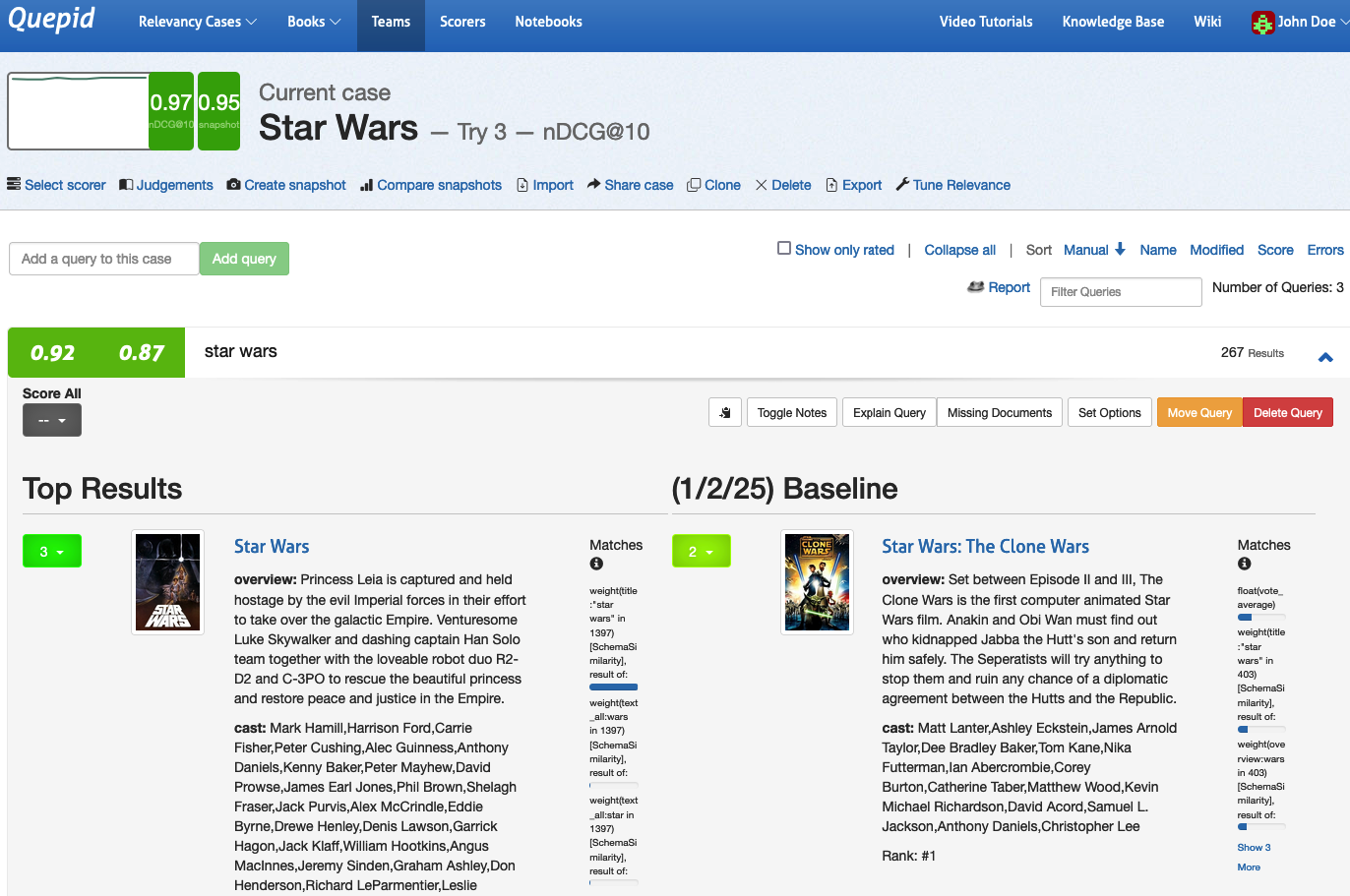

In the window that is shown, select the snapshot you want to compare against and click the Update diff settings button. Quepid will update the page to show the current search results side by side with the results from the selected snapshot.

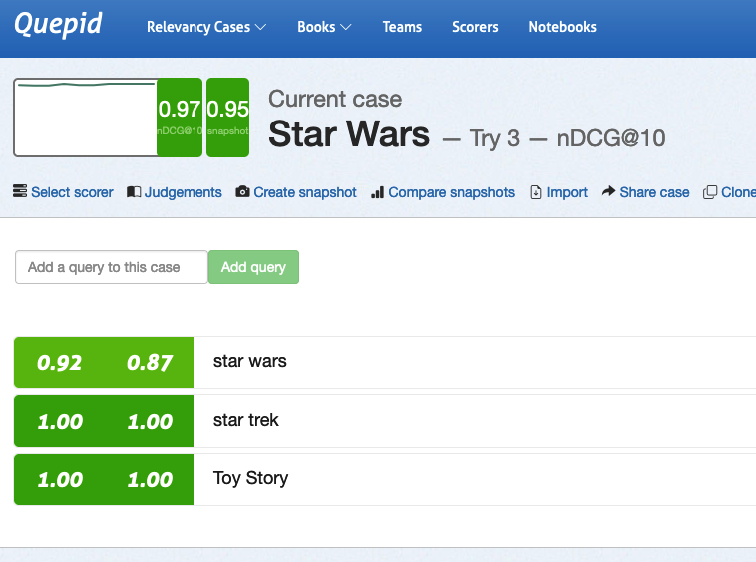

You can see that our current Try 3 results are on the left, and the previously created Snapshot Baseline is on the right. The overall Case score went up by 2 points, from .95 to .97, as measured by nDCG@10.

You can see that this was due to a five point improvement in the star wars query. Opening the query, we can see that the "perfect" movie Star Wars moved to the top in Try 3, and pushed down the "good" movie Star Wars: The Clone Wars from the first position.
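The comparison boils down to subtracting each query's snapshot score from its current score. Here is a minimal sketch, assuming per-query scores are available as dictionaries keyed by query text. The query names and numbers are invented for illustration and are not the values from the screenshots.

```python
def score_deltas(snapshot_scores, current_scores):
    """Per-query change (current minus snapshot) for queries present in both."""
    return {
        query: round(current_scores[query] - snapshot_scores[query], 4)
        for query in current_scores
        if query in snapshot_scores
    }

# Hypothetical scores for two queries, plus one query added after the snapshot.
baseline = {"query a": 0.5, "query b": 0.7}
current = {"query a": 0.75, "query b": 0.7, "query c": 1.0}
deltas = score_deltas(baseline, current)
```

Queries that exist only in the current results are left out, since there is nothing in the snapshot to compare them against. A positive delta means the query improved since the snapshot was taken.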

To stop comparing snapshots, click the Compare snapshots link and select the Disabled option and click the Update diff settings button.

Snapshot comparison is a powerful tool for quickly visualizing the difference in your queries and will assist in your future search tuning efforts. The comparison of the search metrics between these two snapshots provides feedback to the search team! The snapshots can be used to measure progress over time as your search team works to improve your organization's search relevance.